Overview

We introduce a deep learning model that predicts super-resolved versions of diffraction-limited microscopy images. Our model, named Task-Assisted Generative Adversarial Network (TA-GAN), incorporates an auxiliary task (e.g. segmentation, localization) closely related to the characterization of the observed biological nanostructures. We evaluate how TA-GAN improves generative accuracy over unassisted methods using images acquired with different modalities such as confocal, brightfield (diffraction-limited), super-resolved stimulated emission depletion (STED), and structured illumination microscopy. The generated synthetic resolution-enhanced images show an accurate distribution of the F-actin nanostructures, replicate the nanoscale synaptic cluster morphology, and make it possible to identify dividing S. aureus bacterial cell boundaries and to localize nanodomains in simulated images of dendritic spines. We expand the applicability of the TA-GAN to different modalities, auxiliary tasks, and online imaging assistance. Incorporated directly into the acquisition pipeline of the microscope, the TA-GAN informs the user about the nanometric content of the field of view without requiring the acquisition of a super-resolved image. This information is used to optimize the acquisition sequence and reduce light exposure. The TA-GAN also enables the creation of domain-adapted labeled datasets requiring minimal manual annotation, and assists microscopy users by making online decisions regarding the choice of imaging modality and regions of interest.

Source Code

The source code is publicly available on GitHub.
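
The repository holds the authors' implementation. As a rough, unofficial illustration of the task-assisted idea described in the overview, the sketch below combines an adversarial term with an auxiliary-task term computed on the generated image. Everything in it is an assumption made for illustration: the function names, the non-saturating adversarial term, the mean-squared-error task term, and the two weights are hypothetical and are not taken from the TA-GAN code or paper.

```python
import math


def adversarial_term(disc_scores, eps=1e-7):
    """Non-saturating generator objective: mean of -log D(G(x)).

    disc_scores: discriminator outputs in [0, 1] for generated images.
    (Assumed form of the adversarial loss, chosen for illustration.)
    """
    clipped = [min(max(s, eps), 1.0 - eps) for s in disc_scores]
    return -sum(math.log(s) for s in clipped) / len(clipped)


def task_term(task_pred, task_target):
    """Mean squared error between the auxiliary-task output computed on the
    generated image (e.g. a flattened segmentation map) and the reference
    annotation. (Assumed form of the task loss.)
    """
    if len(task_pred) != len(task_target):
        raise ValueError("prediction and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(task_pred, task_target)) / len(task_pred)


def task_assisted_generator_loss(disc_scores, task_pred, task_target,
                                 adv_weight=1.0, task_weight=1.0):
    """Weighted sum of the adversarial and auxiliary-task terms.

    adv_weight and task_weight are hypothetical hyperparameters.
    """
    return (adv_weight * adversarial_term(disc_scores)
            + task_weight * task_term(task_pred, task_target))


if __name__ == "__main__":
    reference_mask = [0.0, 1.0, 1.0, 0.0]   # flattened toy annotation
    predicted_mask = [0.1, 0.8, 0.9, 0.2]   # task output on a generated image
    loss = task_assisted_generator_loss([0.7, 0.6], predicted_mask, reference_mask)
    print(f"generator loss: {loss:.4f}")
```

The point of the design is that the generator is penalized not only for failing to fool the discriminator but also when the structures relevant to the auxiliary task are not recoverable from its output.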

Paper

Bouchard, C., Wiesner, T., Deschênes, A., Bilodeau, A., Lavoie-Cardinal, F. & Gagné, C. Resolution Enhancement with a Task-Assisted GAN to Guide Optical Nanoscopy Image Analysis and Acquisition. bioRxiv (2023).

The preprint is available here.

Datasets

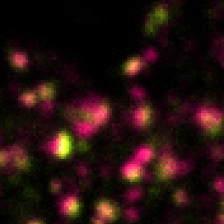

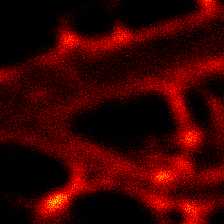

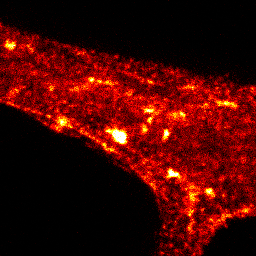

We provide the datasets used in the paper. Just click on an image to download the pre-processed datasets with train/valid/test splits.

If you use the provided datasets, please cite the corresponding papers: "Neuronal activity remodels the F-actin based submembrane lattice in dendrites but not axons of hippocampal neurons" for the Axonal Rings dataset and the Dendritic F-actin Rings and Fibers dataset; "Activity-Dependent Remodeling of Synaptic Protein Organization Revealed by High Throughput Analysis of STED Nanoscopy Images" for the Synaptic Proteins dataset; and "Resolution Enhancement with a Task-Assisted GAN to Guide Optical Nanoscopy Image Analysis and Acquisition" for the Live F-Actin and Translated F-Actin datasets. These datasets are available for general use by academic, non-profit, or government-sponsored researchers. This license does not grant the right to use these datasets or any derivative of them for commercial activities.

Dataset

Dataset

Dataset

Dataset

Dataset (synthetic)

Models

All trained models used for the results presented in the paper are available in the project's GitHub repository, except the weights of the segmentation model for live F-actin (U-Net_live), which are available to download here. These pretrained weights were used to train TA-GAN_live and to generate all segmentation predictions for live-cell images (Figures 3d, 3f, 4b, and 5a, and Supplementary Figures 12 to 20). They were also used in the acquisition loop of the STED microscope for TA-GAN assistance. The weights file is not included in the GitHub repository because it is too large (167.3 MB).

Acknowledgments

We thank Francine Nault and Sarah Pensivy for neuronal cell culture, Gabriel Leclerc for the FIJI macro for segmentation, and Annette Schwerdtfeger for proofreading the manuscript. Funding was provided by grants from the Natural Sciences and Engineering Research Council of Canada (NSERC) (F.L.C. and C.G.), the Canada First Research Excellence Fund (F.L.C. and C.G.), the Canadian Institutes of Health Research (CIHR) (F.L.C.), and the Neuronex Initiative (National Science Foundation 2014862, Fonds de recherche du Québec - Santé) (F.L.C.). C.G. is a CIFAR Canada AI Chair.